Ultimate Guide to Multi-View Image Annotation for Custom Training Datasets in Computer Vision

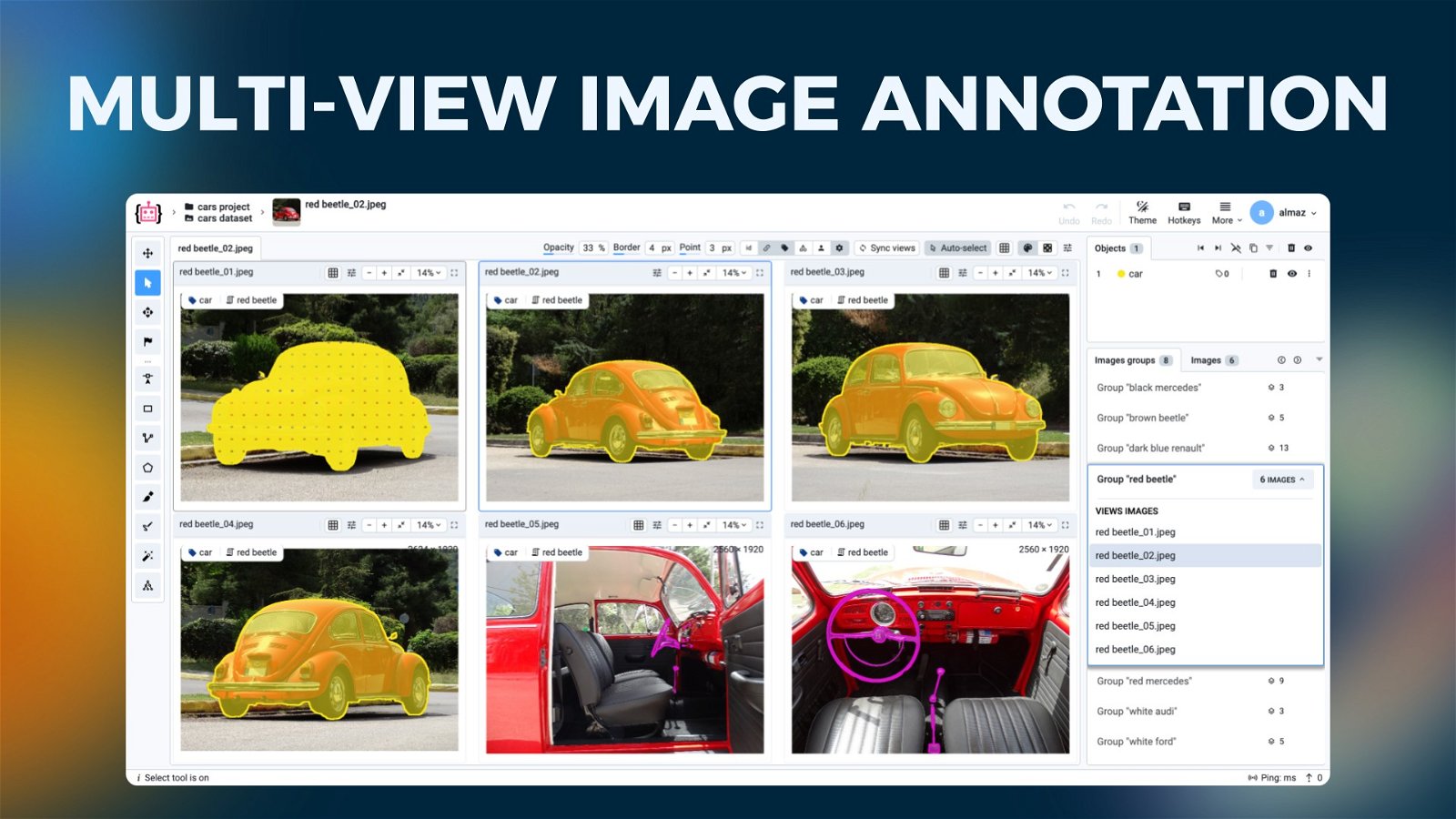

Learn how to use Supervisely's Multi-View Image Labeling Toolbox to upload and annotate multi-view images simultaneously.


Introduction

In this tutorial, you will learn how to annotate multi-view images in the Supervisely Image Labeling Toolbox. We explain the main features of multi-view image labeling that streamline the data annotation process: annotating multiple images simultaneously on a single screen saves time and effort when building custom training datasets for your production Computer Vision models.

Supervisely is an excellent choice for multi-view image annotation tasks. It offers a range of features that make the annotation process more efficient and user-friendly:

- Powerful Image Labeling Toolbox, tailored for multi-view image annotation tasks. Learn more about the features of the Supervisely Image Labeling Toolbox in our Complete Labeling Toolbox overview.

- Synchronized views and labeling in Multi-View mode to annotate objects on multiple images simultaneously on one screen. This feature is especially useful for annotating multispectral images, a special case of multi-view annotation.

- Any number of images in groups: group size can vary according to your needs. You can group images by different criteria, such as camera angles, object classes, or any other property.

- Well-optimized manual tools to annotate multi-view images: Bounding Box, Polygon, Mask Pen, Brush, Polyline, and Graph (keypoints). For segmentation tasks, see our comprehensive overview of segmentation techniques.

- Interactive AI-assisted segmentation with the Smart Tool to speed up the labeling process.

- Developer-friendly Python SDK for automation and integrations. See the development guide on multi-view images in our Developer Portal to learn more about the Python SDK and how to automate your annotation tasks.

- Plenty of apps from the Ecosystem: open-source, web-based Python applications that cover a wide range of features, from data import to model training, letting you enhance your workflow and customize your pipeline, including with neural networks.

What are multi-view images?

Multi-view images are a collection of images of objects or scenes captured from different perspectives or cameras. Together, they provide a more comprehensive view of the objects or scenes and a more detailed understanding of their structure and features. Multi-view images are widely used in various industries, including autonomous vehicles, 3D reconstruction, medicine, multispectral imaging, 3D mapping, and more.

The Supervisely Image Labeling Toolbox has a special multi-view labeling mode with additional functionality to synchronize views and labels across multi-view images, allowing annotators to zoom, pan, navigate, and annotate multiple images simultaneously on a single screen.

Multi-view Annotation in Various Industries

Multi-view annotation is useful in many industries, including:

- Multispectral imaging: a special case of multi-view imaging. Check out our guide How to Annotate Multispectral Images to learn more about the benefits and applications of multispectral imaging in agriculture, environmental monitoring, and more.

- Depth estimation: estimating the depth of objects in a scene.

- Insurance industry: identifying object parts and damages from multiple perspectives.

- 3D reconstruction: creating a 3D model of a scene from multiple images.

- Autonomous vehicles: training models for object detection, lane recognition, and obstacle avoidance.

- Traffic monitoring systems: tracking and analyzing traffic patterns.

- 3D mapping and geo-localization.

- Medical imaging: identifying body organs, tissues, tumors and other abnormalities.

- Agriculture: monitoring weeds, crops, and other plants from multiple perspectives for plant structure recognition and analysis, or disease detection.

Below, we outline some of these use cases with accompanying multi-view image examples and annotation samples.

1. Depth Estimation

Multi-view image annotation can improve the precision of depth estimation. Images [1] and [2] from the Middlebury Stereo 2001 Datasets illustrate how the same objects appear at different positions depending on the camera perspective. This shift makes it possible to estimate depth and to build a segmented map with zones corresponding to different distances.

![[1] Exploring Depth Estimation Precision in Middlebury Stereo 2001 Datasets.](https://cdn.supervisely.com/blog/multi-view-image-annotation/pose-estimation-img.jpg?width=800) [1] Exploring Depth Estimation Precision in Middlebury Stereo 2001 Datasets.


[2] Animation (all images are warped to the reference frame viewpoint), source

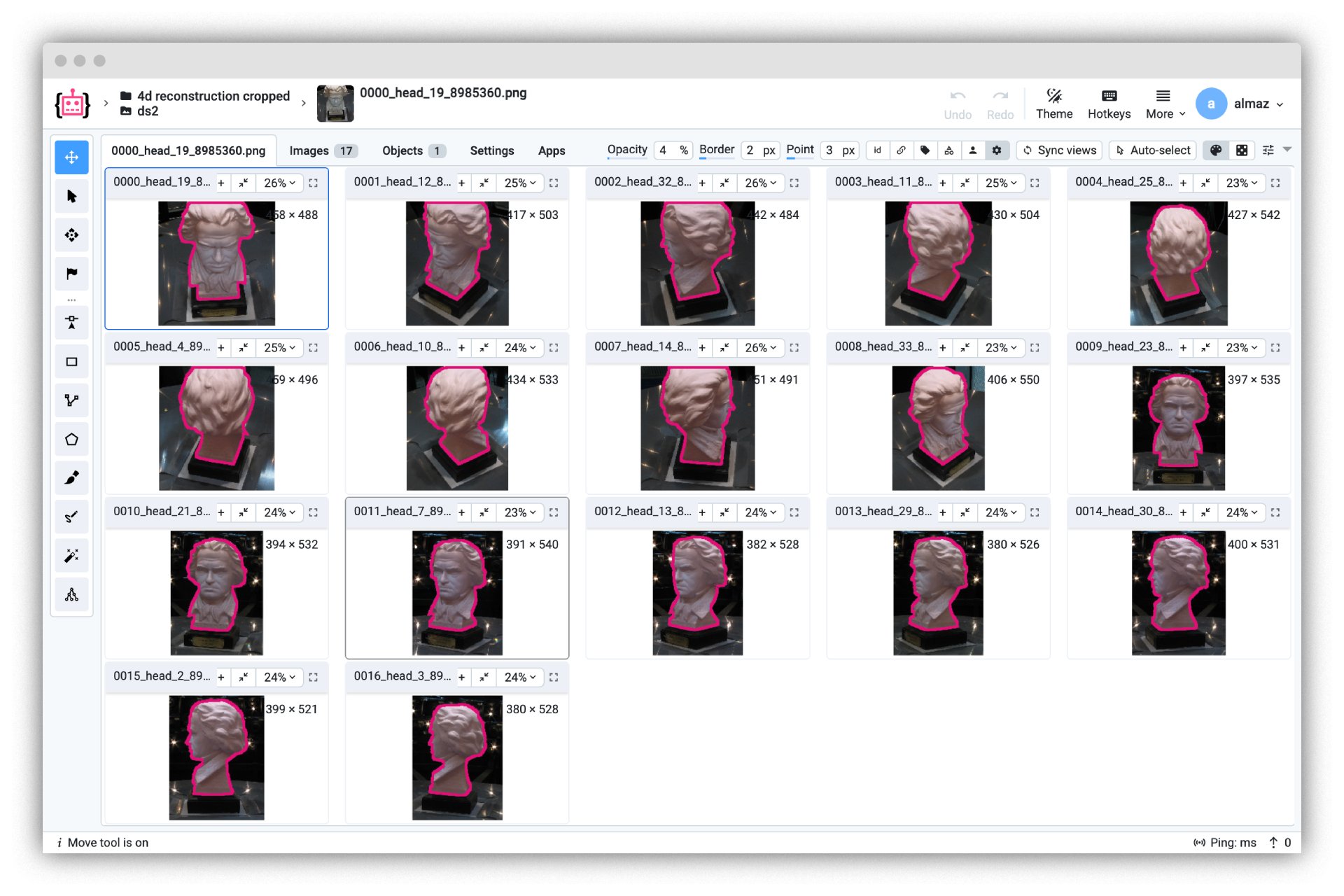
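For a rectified stereo pair, the horizontal shift of a point between the two views (its disparity d, in pixels) is inversely proportional to its depth: Z = f·B/d, where f is the focal length in pixels and B is the distance between the two cameras. The minimal sketch below assumes such a rectified pair; the camera constants and zone bounds are hypothetical, not values from the Middlebury data. It converts a disparity map to depth and then to the kind of distance-zone map described above.

```python
from typing import List, Optional

# Hypothetical camera parameters for a rectified stereo pair (not from the dataset).
FOCAL_PX = 700.0    # focal length in pixels
BASELINE_M = 0.16   # distance between the camera centers in meters


def disparity_to_depth(disparity: List[List[float]],
                       focal_px: float = FOCAL_PX,
                       baseline_m: float = BASELINE_M) -> List[List[Optional[float]]]:
    """Convert a disparity map (pixels) to depth (meters) with Z = f * B / d.

    Pixels with non-positive disparity have no valid match and get None.
    """
    return [[focal_px * baseline_m / d if d > 0 else None for d in row]
            for row in disparity]


def depth_to_zones(depth: List[List[Optional[float]]],
                   bounds: List[float]) -> List[List[int]]:
    """Label each pixel with a distance-zone index.

    Zone i holds depths below bounds[i] (and at or above bounds[i - 1]);
    depths beyond the last bound get len(bounds); invalid pixels get -1.
    """
    zones = []
    for row in depth:
        zone_row = []
        for z in row:
            if z is None:
                zone_row.append(-1)
                continue
            zone = len(bounds)
            for i, bound in enumerate(bounds):
                if z < bound:
                    zone = i
                    break
            zone_row.append(zone)
        zones.append(zone_row)
    return zones
```

Nested lists keep the sketch dependency-free; for real images, the same arithmetic is usually done on NumPy arrays.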

2. 3D Reconstruction

Images [3] and [4] from the Multi-View 3D Reconstruction dataset show an example of 3D reconstruction (Continuous Ratio Optimization via Convex Relaxation with Applications to Multiview 3D Reconstruction, paper by Kalin Kolev and Daniel Cremers), where the goal is to recover a scene's geometric structure from a series of images with known or estimable camera parameters. The process involves solving a pixel-wise correspondence problem across multiple images to (partially) recover 3D information. Accuracy improves with the variety of perspectives, which together yield a more complete 3D representation from a collection of 2D images. In this context, multi-view image annotation is a valuable companion: it improves the training of machine learning models and supports a more robust understanding of object features and spatial structures.

[3] Multi-view image annotation in Supervisely Image Labeling Tool, dataset source

[4] Continuous Ratio Optimization via Convex Relaxation with Applications to Multiview 3D Reconstruction, Paper by Kalin Kolev and Daniel Cremers, source

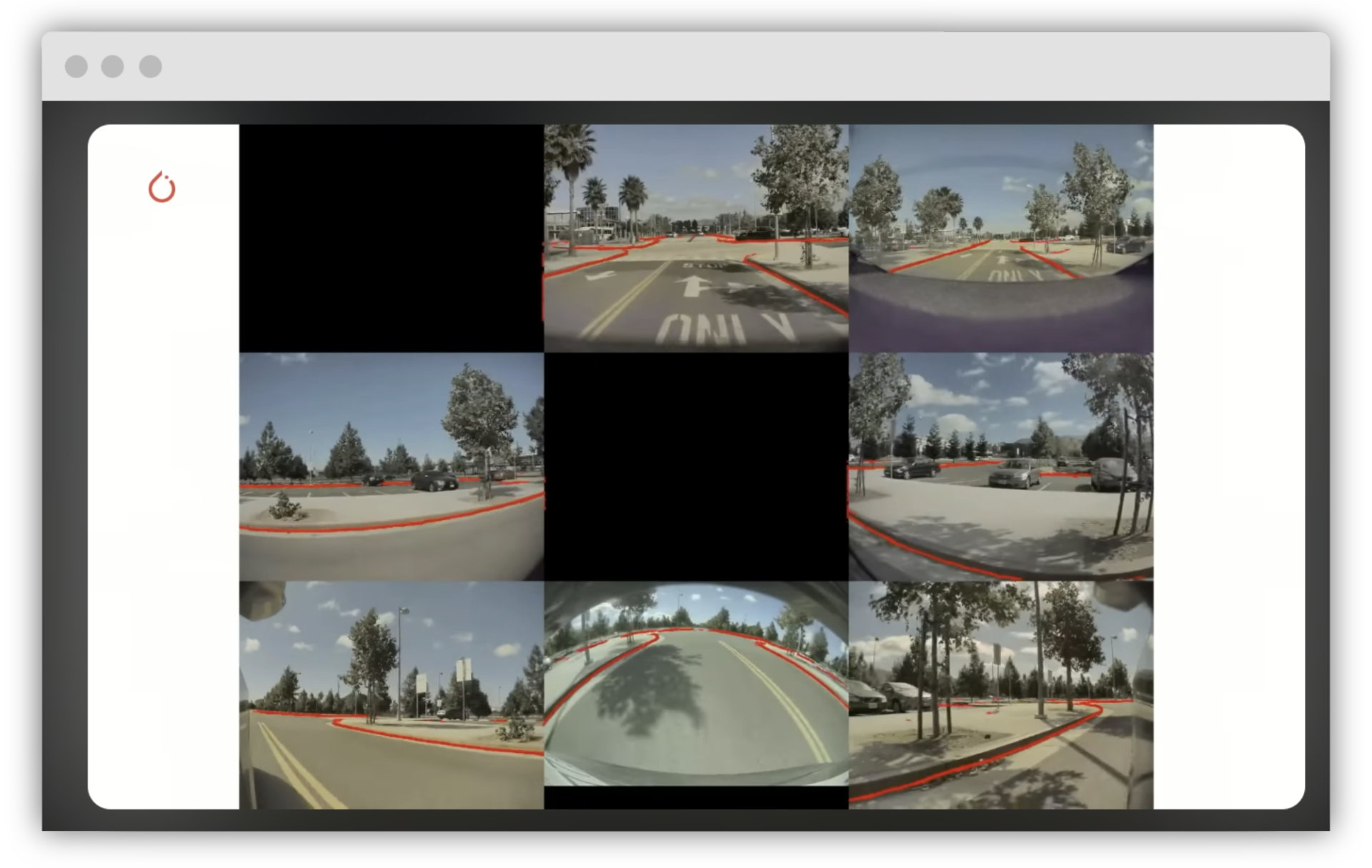

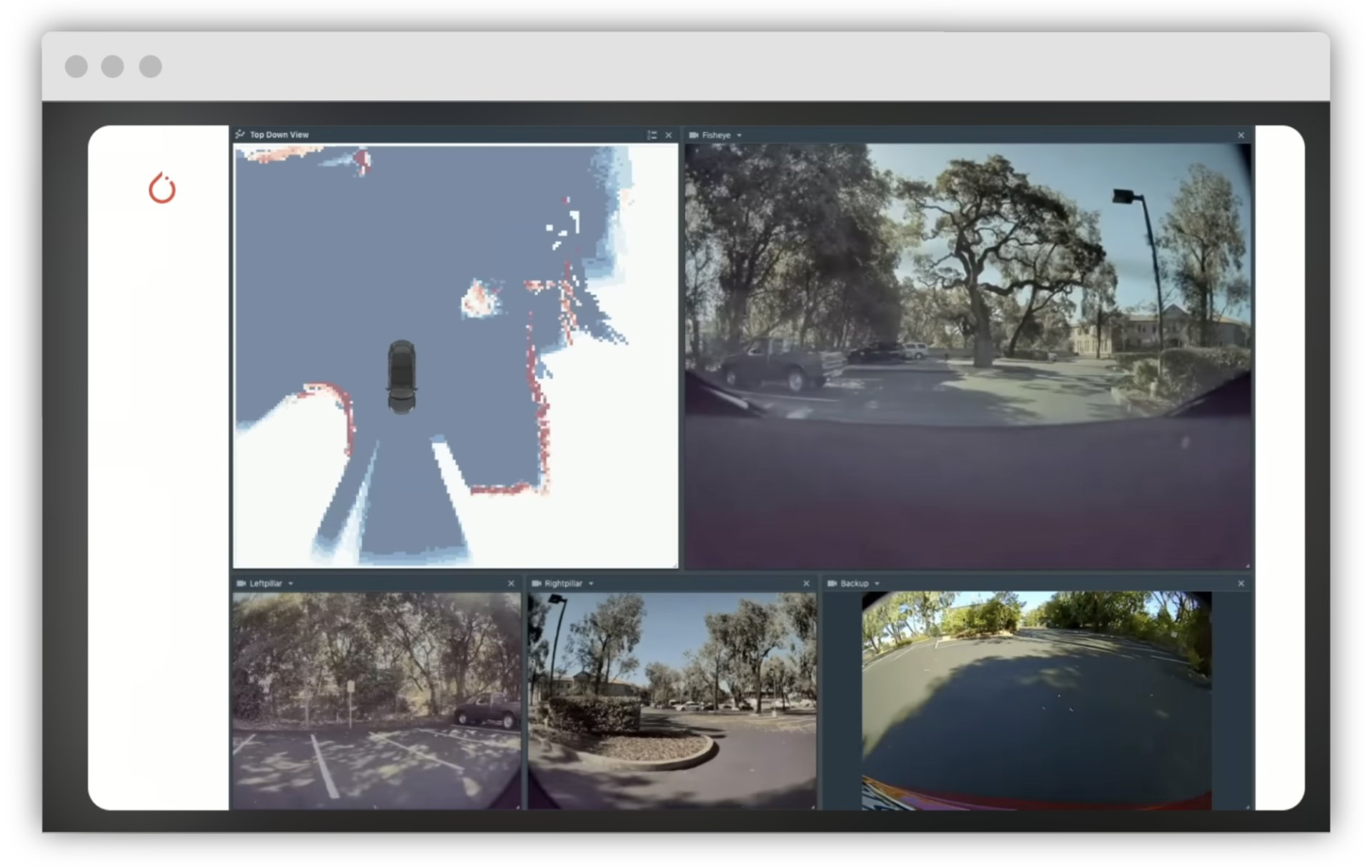
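With known camera parameters, a single pixel correspondence across two or more views is enough to recover a 3D point by linear triangulation. The sketch below uses the standard linear (DLT-style) formulation: each view with a 3×4 projection matrix P and an observed pixel (u, v) contributes two linear equations in the unknown point (X, Y, Z), and the stacked system is solved in the least-squares sense. This is a textbook illustration of the correspondence-to-geometry step, not the method of the Kolev and Cremers paper, which uses convex relaxation; the cameras in any usage are up to you.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]  # 3x4 projection matrix P = K [R | t]


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def triangulate(views: Sequence[Tuple[Matrix, Tuple[float, float]]]) -> List[float]:
    """Recover a 3D point from its pixel coordinates in two or more calibrated views."""
    rows, rhs = [], []
    for p, (u, v) in views:
        # u * (P3 . X) - (P1 . X) = 0 and v * (P3 . X) - (P2 . X) = 0 with X = (x, y, z, 1):
        # the x, y, z coefficients go into A, the constant term moves to the right side.
        for coord, k in ((u, 0), (v, 1)):
            rows.append([coord * p[2][j] - p[k][j] for j in range(3)])
            rhs.append(-(coord * p[2][3] - p[k][3]))
    # Least squares via the normal equations (A^T A) x = A^T b.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return _solve3(ata, atb)
```

More views add more rows to the same system, which is one reason varied perspectives improve reconstruction accuracy.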

3. Multi-View for Autonomous Vehicles

For autonomous vehicles, accurate perception of the environment is essential for safe and efficient navigation. Multi-view image annotation plays a key role in training machine learning models for object detection, lane recognition, and obstacle avoidance. Training data that reflects real-world driving scenarios improves the model's ability to generalize and respond effectively to diverse road conditions, helping keep passengers and pedestrians safe.

[5] Multi-view images from 8 cameras, source

[6] Bird's-eye view from multi-view 2D images, source

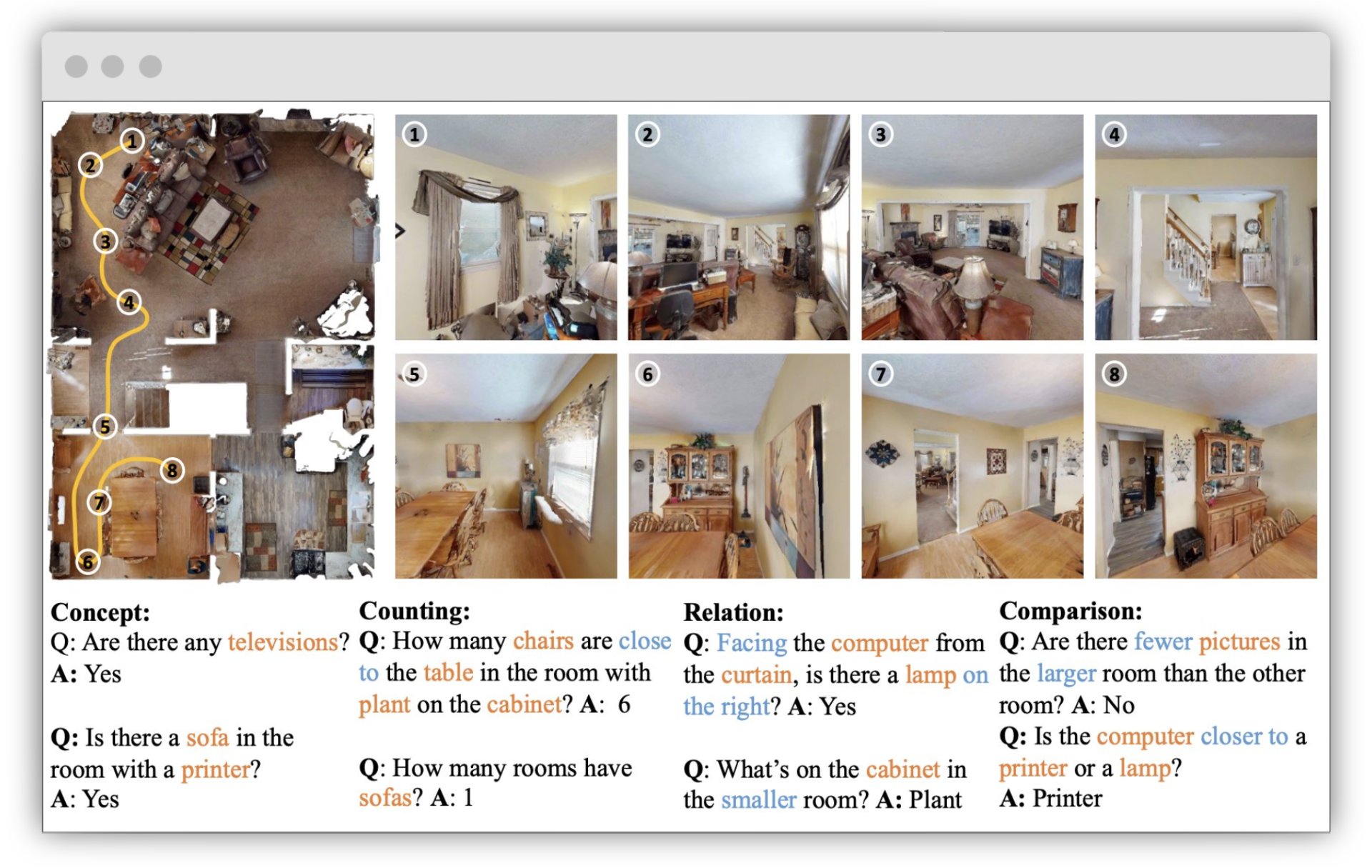

4. 3D Concept Learning and Reasoning

Image [7] illustrates the framework from the paper 3D Concept Learning and Reasoning from Multi-View Images, in which enforcing multi-view consistency is the first step in scene understanding and geometric learning.

[7] 3D Concept Learning and Reasoning from Multi-View Images, Paper by Zhihao Xia, Sifei Liu, Cewu Lu, source

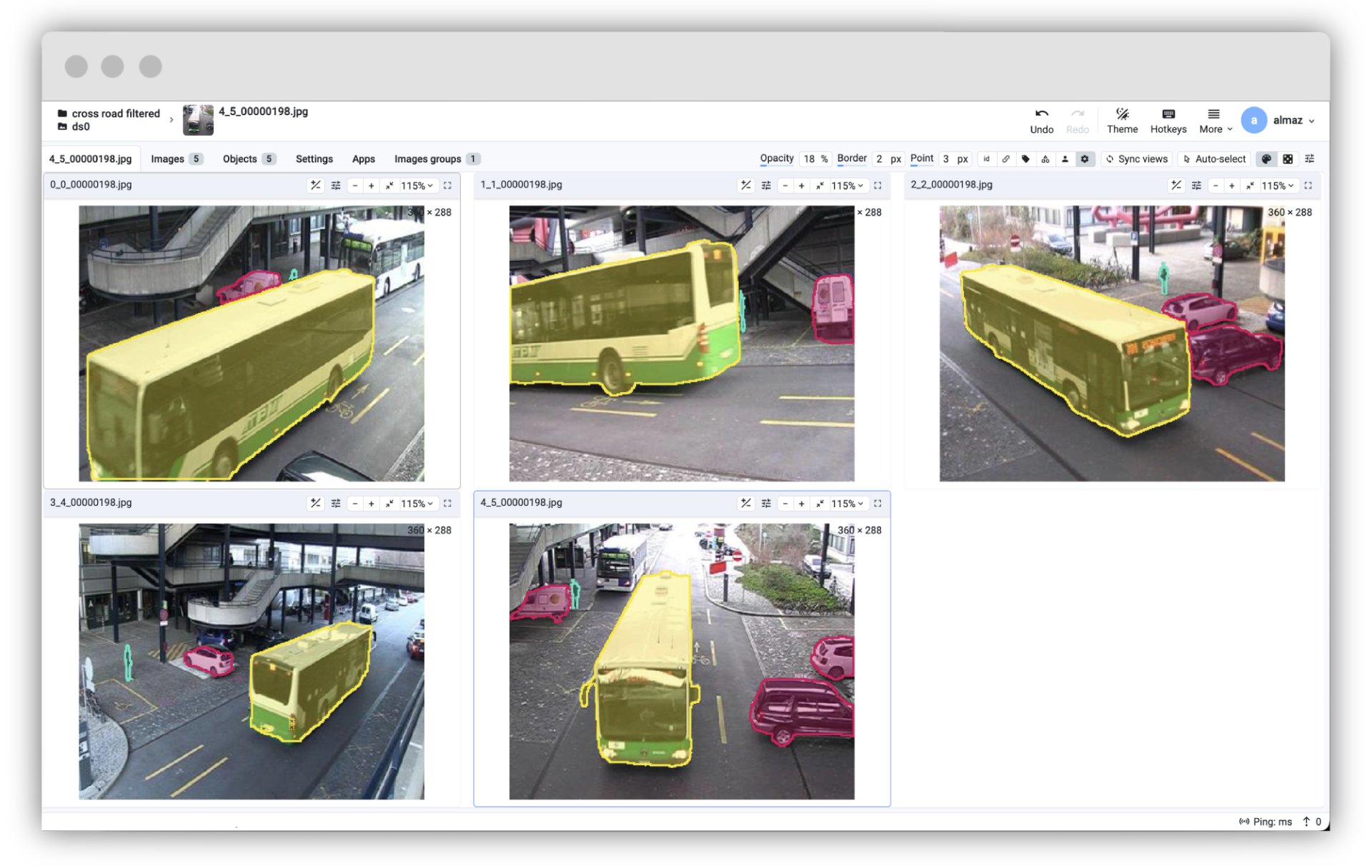

5. Traffic Monitoring Systems

Multi-view image annotation is also valuable for traffic monitoring systems, enabling datasets that capture diverse road conditions. Such datasets support training machine learning models for object detection, classification, and tracking, improving the accuracy and robustness of traffic monitoring. Image [8] from the Multi-view Multi-class Detection dataset shows how multi-view annotation helps on scenes captured from different angles, where some details are hidden from one camera but visible from another.

[8] Multi-View for traffic monitoring, source

6. 3D Mapping and Geo-Localization

Image [9] from University-1652: A Multi-view Multi-source Benchmark for Drone-based Geo-localization showcases the potential of multi-view image annotation for drone-based geo-localization and navigation.

[9] University-1652: A Multi-view Multi-source Benchmark for Drone-based Geo-localization, source

How to annotate multi-view images in Supervisely

Let's dive into the step-by-step guide on how to use multi-view image annotation in Supervisely. We will cover the following topics:

- structuring multi-view images

- importing multi-view images

- annotating multi-view images

- exporting your data in various formats

- organizing collaboration with your team

- converting an existing project to a multi-view setup

- easy integration with the Python SDK for data workflow automation

Illustrative use case

Task description: suppose you have a dataset of 600 images: 100 scenes, each with 6 images of a car captured from different angles. You need to annotate objects and their parts (doors, windows, wheels, etc.) on the images from all cameras.

Challenges:

- few tools let you annotate all images on one screen

- need to use both manual and AI-assisted segmentation tools

- time-consuming process of switching between images and classes

Solution: Supervisely is a full-stack solution for this task. It offers a range of image annotation, data management, and neural network features that make the annotation process more efficient and user-friendly:

- synchronized views and labeling in Multi-View mode to annotate multiple images simultaneously on one screen

- various optimized tools for manual annotation: Bounding Box, Polygon, Mask Pen, Brush, Polyline, and Graph (keypoints). Check our comprehensive overview of Annotation Techniques in image segmentation.

- convenient tools for labeling multiple parts of an object using an efficient splitting annotation technique. Learn more about it in our blog post.

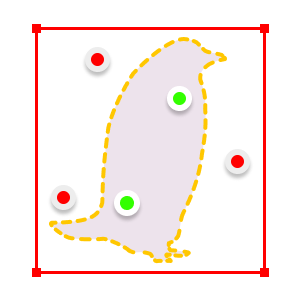


- interactive AI-assisted segmentation with the Smart Tool. Use free pre-trained models or train your custom model to speed up the annotation process. Check out our guide on how to use Smart Tool to annotate images with AI assistance.

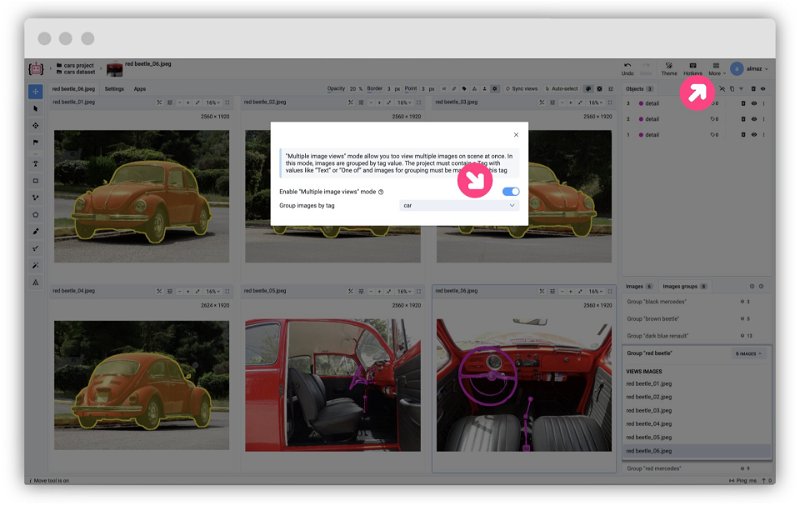

With Multiple Image Views Mode enabled in the Supervisely Image Labeling Tool, you can annotate the images from all cameras on one screen, saving time and effort when building custom training datasets for your production Computer Vision models.

The rest of this tutorial shows how to use it step by step.

Step 1: how to structure multi-view images

Organize your images into a simple project structure, as in the example below:

```
📂 dataset_name
┣ 📂 group_name_1
┃ ┣ 🏞️ image_1.png
┃ ┣ 🏞️ image_2.png
┃ ┗ 🏞️ image_3.png
┗ 📂 group_name_2
  ┣ 🏞️ image_4.png
  ┣ 🏞️ image_5.png
  ┣ 🏞️ image_6.png
  ┣ 🏞️ image_7.png
  ┗ 🏞️ image_8.png
```

We have prepared 🔗 demo data so you can quickly reproduce every step of this tutorial and get a clear understanding of the whole workflow.

In this example, there are 2 groups of images: group_name_1 (3 images) and group_name_2 (5 images). On import, the application automatically assigns each image a predefined grouping tag whose value is its folder name (group_name_1 or group_name_2), and then groups the images by these tag values. That is all the preparation your multi-view data needs in your local directory.

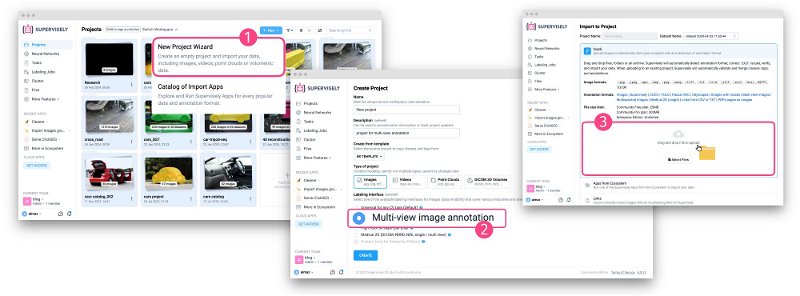
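If your raw images sit in one flat folder, a short script can produce this layout. The minimal sketch below assumes a hypothetical naming scheme `<scene>_cam_<n>.png` (for example `scene_001_cam_3.png`, matching the 100 scenes × 6 cameras use case) and uses the scene prefix as the group folder name; `scene_of` and `build_multiview_layout` are illustrative helpers, so adapt `group_key` to your own file names.

```python
import shutil
from pathlib import Path
from typing import Callable, Dict, List


def scene_of(filename: str) -> str:
    """Hypothetical naming scheme: 'scene_001_cam_3.png' -> 'scene_001'."""
    stem = Path(filename).stem
    return stem.rsplit("_cam_", 1)[0]


def build_multiview_layout(src_dir: Path, dataset_dir: Path,
                           group_key: Callable[[str], str] = scene_of) -> Dict[str, List[str]]:
    """Copy images from a flat folder into dataset_dir/<group_name>/ subfolders.

    Returns {group_name: [file names]} so you can check the group sizes.
    """
    groups: Dict[str, List[str]] = {}
    for image in sorted(src_dir.glob("*.png")):
        group = group_key(image.name)
        target = dataset_dir / group
        target.mkdir(parents=True, exist_ok=True)
        shutil.copy2(image, target / image.name)  # copy, leaving the originals untouched
        groups.setdefault(group, []).append(image.name)
    return groups
```

For example, `build_multiview_layout(Path("raw_images"), Path("dataset_name"))` creates one subfolder per scene, ready for import.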

Step 2: how to import multi-view images

You have two options to import multi-view images into Supervisely:

- Option 1: Use our Import Wizard to create a new project with multi-view settings and import images with groups automatically. It's a simple and intuitive way to import multi-view images.

Upload multi-view images

- Option 2: Use the Import images groups application to import multi-view images.

Import images groups

Import images groups connected via user defined tag

At any moment, you can enable or disable multi-view display in the Labeling Tool by clicking the More button and selecting Multiple Image Views Mode. This setting resets to the default after the page reloads; to make it persistent, change it in the project settings.

Enable multi-view in the Labeling Tool

Step 3: how to annotate multi-view images

After these few steps, you are ready to start annotating your multi-view images in Supervisely. You can now use the Labeling Tool to annotate multiple images simultaneously on one screen without switching between them.

You can use various tools:

- Manual tools for annotation or for quickly correcting individual cases: Bounding Box, Polygon, Mask Pen, Brush, Polyline, and Graph (keypoints).

- Multi-view mode tools: zoom, pan, and synchronized labeling of multiple images simultaneously on a single screen. For example, you can toggle a specific image to fill the whole screen, or zoom in on one image or on all images at once for more detailed annotation. You can also navigate between multi-view images with the LEFT and RIGHT arrow keys.

- Tags assigned to images or objects for your needs. Check our Complete Guide about Tags for classification in Computer Vision tasks.

- ✨ AI-assisted Smart Tool to speed up the annotation process.

Combine the power of AI with grouped display to annotate images faster and more efficiently. Connect your computer with a GPU and use popular pre-trained models in the Smart Tool to improve efficiency.

The Smart Tool lets you annotate images with AI assistance using a variety of neural networks integrated into the Supervisely platform, including robust models such as RITM and Segment Anything, and we keep adding new models to our Ecosystem. The effectiveness, precision, and speed of segmentation depend strongly on the chosen model, so we recommend trying several models to find the one that best suits your needs.

Read the guide on how to use the Smart Tool to segment images with model assistance.

- RITM interactive segmentation SmartTool: state-of-the-art object segmentation model in the Labeling Interface

- Serve Segment Anything Model: deploy the model as a REST API service

- Serve ClickSEG: deploy ClickSEG models for interactive instance segmentation

- EiSeg interactive segmentation SmartTool: SmartTool integration of Efficient Interactive Segmentation (EISeg)

- Serve Segment Anything in High Quality: run HQ-SAM and then use it in the labeling tool

Integrated models in Supervisely

You can also train your own model and use it in the Smart Tool. Explore these blog posts on the topic:

- How to Train Smart Tool for Precise Cracks Segmentation in Industrial Inspection

- Automate manual labeling with a custom interactive segmentation model for agricultural images

- Unleash The Power of Domain Adaptation - How to Train Perfect Segmentation Model on Synthetic Data with HRDA

- Lessons Learned From Training a Segmentation Model On Synthetic Data

Step 4: how to export multi-view annotations

After labeling multi-view images, you can export your annotations in various formats, such as Supervisely JSON, COCO, and YOLO.

At any moment, you can reimport your data into the Supervisely platform. To preserve the grouping, export and import the data in the Supervisely format. Learn more about the Supervisely JSON format.
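Because the grouping is stored as an image tag, it survives a round trip through the Supervisely JSON format. The minimal sketch below reads an exported project and rebuilds the groups from that tag. It assumes the common export layout `<project>/<dataset>/ann/<image_name>.json`, with image-level tags listed under `"tags"` as objects with `"name"` and `"value"` keys; the grouping tag name is passed in as `tag_name` because it depends on your project settings, and the helper itself is hypothetical.

```python
import json
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def groups_from_supervisely_project(project_dir: Path, tag_name: str) -> Dict[str, List[str]]:
    """Rebuild {group value: [image names]} from an exported Supervisely project.

    Assumes annotation files at <project>/<dataset>/ann/<image_name>.json.
    Images without the grouping tag (or with an empty value) are skipped.
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for ann_path in sorted(project_dir.glob("*/ann/*.json")):
        ann = json.loads(ann_path.read_text(encoding="utf-8"))
        for tag in ann.get("tags", []):
            if tag.get("name") == tag_name and tag.get("value") is not None:
                # 'car_01.png.json' -> image name 'car_01.png'
                groups[str(tag["value"])].append(ann_path.stem)
    return dict(groups)
```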

- Export to COCO: converts Supervisely to COCO format and prepares a tar archive for download

- Export to YOLOv8 format: transforms Supervisely format to YOLOv8 format
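YOLO detection labels store one line per object: the class index followed by the box center, width, and height, all normalized by the image size. The sketch below shows only that coordinate math for rectangle objects in one Supervisely image annotation, assuming the `size` and `objects[].points.exterior` fields of the Supervisely JSON format; `class_ids` is a hypothetical class-to-index mapping you supply. It is not the code of the export apps above, which remain the reference and also handle project structure, class lists, and other geometry types.

```python
from typing import Dict, List


def supervisely_to_yolo(ann: dict, class_ids: Dict[str, int]) -> List[str]:
    """Convert rectangle objects of one Supervisely image annotation to YOLO lines.

    Each line is 'class_id x_center y_center width height', normalized to [0, 1].
    """
    img_h = ann["size"]["height"]
    img_w = ann["size"]["width"]
    lines = []
    for obj in ann.get("objects", []):
        if obj.get("geometryType") != "rectangle":
            continue  # polygons, masks, etc. need a different conversion
        (left, top), (right, bottom) = obj["points"]["exterior"]
        x_c = (left + right) / 2 / img_w
        y_c = (top + bottom) / 2 / img_h
        w = (right - left) / img_w
        h = (bottom - top) / img_h
        lines.append(f"{class_ids[obj['classTitle']]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines
```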

Step 5: how to organize collaboration

How else can you speed up the annotation process?

✅ Create a team, invite your colleagues to the labeling jobs, and work together on the same project.

Check out our blog posts on how to effectively perform annotation at scale using Labeling Jobs, Labeling Queues and Labeling Consensus approaches.

Labeling Jobs and other collaboration tools in Supervisely help you organize work efficiently and handle tasks such as:

- Job management: describing a particular task, including which objects to annotate and how

- Progress monitoring: tracking annotation status and reviewing submitted results

- Access permissions: limiting access to specific datasets, classes, and tags within a single job

You can also take a screenshot for urgent tasks without additional apps and quickly share the link.

Step 6 (optional): how to convert an existing project to multi-view setup

If you already have images in your project and want to group them, you can use the Group images for multi-view labeling application.

The application organizes images into groups, adds grouping tags, and activates grouping and multi-tag modes in the project settings. The size of the groups is determined by the Batch size slider in the application window, and three grouping options are available: Group by Batches, Group by Object Class, and Group by Image Tags.

Just select the app from the context menu of the project or dataset, specify the grouping type, and press the Run button. The application will group images according to the specified criteria and activate multi-view mode in the project settings.

Note: Before running the app, be aware that it modifies the project it is applied to. All of the app's changes can be reverted manually in the project settings, but we recommend creating a copy of the project if you are concerned about the impact this app may have on it.
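The batch and tag grouping options can be pictured with the minimal sketch below. It illustrates the idea only and is not the source code of the Group images for multi-view labeling app: group names such as `group_1` are hypothetical, and keeping a final, smaller batch as its own group is an assumption about how a leftover batch is handled.

```python
from typing import Dict, List, Sequence


def group_by_batches(image_names: Sequence[str], batch_size: int) -> Dict[str, List[str]]:
    """Split images, in the given order, into consecutive groups of batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    return {
        f"group_{i // batch_size + 1}": list(image_names[i:i + batch_size])
        for i in range(0, len(image_names), batch_size)
    }


def group_by_tag(image_tags: Dict[str, Dict[str, str]], tag_name: str) -> Dict[str, List[str]]:
    """Group images by the value of one image tag; untagged images are left out."""
    groups: Dict[str, List[str]] = {}
    for image, tags in image_tags.items():
        if tag_name in tags:
            groups.setdefault(tags[tag_name], []).append(image)
    return groups
```

Grouping by object class follows the same pattern as `group_by_tag`, keyed on a class present in each image instead of a tag value.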

Easy integration for Python developers

Automate processes with multi-view images using Supervisely Python SDK.

```bash
pip install supervisely
```

You can learn more in our Developer Portal; here we just show how to upload your image groups with a few lines of code.

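```python
import supervisely as sly

# Reads SERVER_ADDRESS and API_TOKEN from environment variables
api = sly.Api.from_env()

project_id = 123  # placeholder: your project ID
dataset_id = 456  # placeholder: your dataset ID

# enable multi-view display in project settings
api.project.set_multiview_settings(project_id)

images_paths = ["path/to/audi_01.png", "path/to/audi_02.png"]

# upload a group of images named "audi"
api.image.upload_multiview_images(dataset_id, "audi", images_paths)
```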

In the example above, we uploaded one group of two multi-view images named "audi". Image grouping must also be enabled in the project settings, either before or after uploading the images.

There's much more you can do with multi-view images using our Python SDK. You can find a set of Python SDK tutorials on working with images on our Developer Portal.
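To upload a whole dataset laid out as in Step 1, you can loop over the group folders and call `upload_multiview_images` once per folder, using the folder name as the group name. A minimal sketch, assuming that layout; `upload_groups` and `IMAGE_EXTENSIONS` are hypothetical helpers, and `api` is expected to be a `supervisely.Api` instance.

```python
from pathlib import Path
from typing import Dict, List

IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}  # hypothetical filter, extend as needed


def upload_groups(api, dataset_id: int, dataset_dir: Path) -> Dict[str, int]:
    """Upload every subfolder of dataset_dir as one multi-view group.

    The group name is the folder name. Returns {group_name: number_of_images}.
    """
    uploaded: Dict[str, int] = {}
    for group_dir in sorted(p for p in dataset_dir.iterdir() if p.is_dir()):
        paths: List[str] = sorted(
            str(p) for p in group_dir.iterdir() if p.suffix.lower() in IMAGE_EXTENSIONS
        )
        if not paths:
            continue  # skip folders without images
        api.image.upload_multiview_images(dataset_id, group_dir.name, paths)
        uploaded[group_dir.name] = len(paths)
    return uploaded
```

For example, after `api = sly.Api.from_env()` and `api.project.set_multiview_settings(project_id)`, call `upload_groups(api, dataset_id, Path("dataset_name"))`. Passing `api` in as a parameter also makes the function easy to test with a stand-in object.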

Summary

In this tutorial, we have covered the main features of multi-view image annotation in Supervisely. We have explained how to structure, import, annotate, and export multi-view images, as well as how to convert an existing project to multi-view mode.

With the Supervisely end-to-end Computer Vision platform, annotating multi-view images becomes much easier, and you can build custom training datasets and train your production Computer Vision models more efficiently within a single web-based environment.

Supervisely for Computer Vision

Supervisely is an online and on-premise platform that helps researchers and companies build computer vision solutions. We cover the entire development pipeline, from labeling images, videos, and 3D data to model training.

The big difference from other products is that Supervisely is built like an OS with countless Supervisely Apps: interactive web tools that run in your browser, yet are powered by Python. This lets us integrate all those awesome open-source machine learning tools and neural networks, enhance them with a user interface, and let everyone run them with a single click.

You can order a demo or try it yourself for free on our Community Edition — no credit card needed!